Mistral AI Raises $415M and Releases New LLM as Free Torrent

French generative AI startup Mistral AI has closed a €385 million ($415 million) Series A funding round led by Andreessen Horowitz, seven months after emerging from stealth with a $113 million seed round. The large language model (LLM) developer also debuted Mixtral 8x7B, an ‘open-weight’ model that it claims sets new standards for performance, and opened access to its commercial platform.

Mistral Mixtral

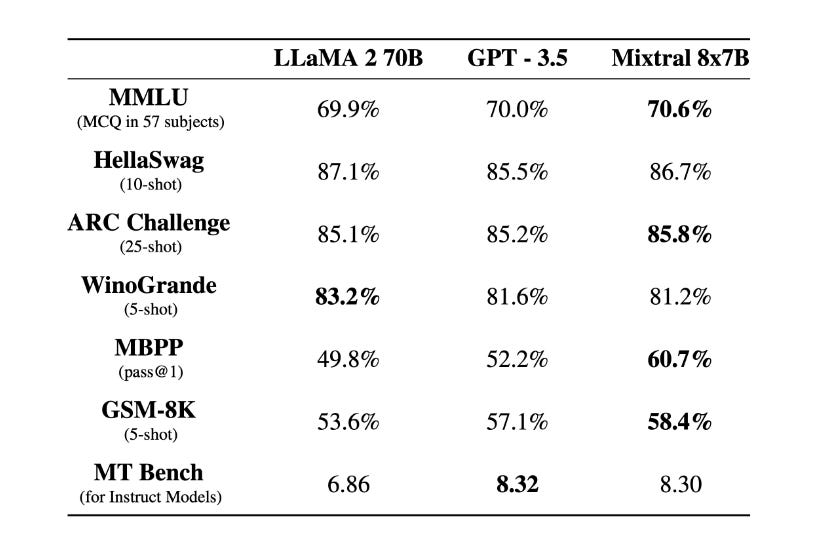

Mistral showcased how its new model, called Mixtral 8x7B, performs compared to Meta’s Llama 2 and OpenAI’s GPT-3.5 models. The tests point to Mixtral performing as well as or even better than the other two options, with lower costs and latency. There is one notable absence from the test list: TruthfulQA, which is usually cited for testing an LLM’s ability not to repeat common online misinformation. It’s a somewhat glaring omission when Mixtral 8x7B does so well otherwise. Even so, Mistral is keen to highlight how much less its model costs to run compared to the OpenAI and Meta options.

“Mixtral is a sparse mixture-of-experts network. It is a decoder-only model where the feedforward block picks from a set of 8 distinct groups of parameters. At every layer, for every token, a router network chooses two of these groups (the “experts”) to process the token and combine their output additively,” Mistral explained in a blog post. “This technique increases the number of parameters of a model while controlling cost and latency, as the model only uses a fraction of the total set of parameters per token. Concretely, Mixtral has 45B total parameters but only uses 12B parameters per token. It, therefore, processes input and generates output at the same speed and for the same cost as a 12B model.”
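To make the routing idea in that quote concrete, here is a minimal sketch of a single sparse mixture-of-experts layer in plain Python. It is an illustration under stated assumptions, not Mistral's implementation: only the 8 experts, the choice of two per token, and the additive combination come from the quoted description. The tiny vector size, the random weights, the single linear map standing in for each expert, the softmax gating over the two chosen experts, and the names `moe_layer`, `make_expert`, and `router_weights` are all hypothetical.

```python
import math
import random

NUM_EXPERTS = 8  # "a set of 8 distinct groups of parameters" (from the quote)
TOP_K = 2        # the router "chooses two of these groups" per token (from the quote)


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


def make_expert(dim, rng):
    # Assumption: each expert is reduced to one random linear map.
    # A real feedforward block is larger and non-linear.
    return [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]


def moe_layer(token, router_weights, experts, top_k=TOP_K):
    """Route one token vector to its top_k experts and sum their weighted outputs."""
    logits = matvec(router_weights, token)  # one router score per expert
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Assumption: gate weights are a softmax over the chosen experts only.
    gates = softmax([logits[i] for i in chosen])
    output = [0.0] * len(token)
    for gate, idx in zip(gates, chosen):
        expert_out = matvec(experts[idx], token)  # only the chosen experts run
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen, gates


rng = random.Random(0)
DIM = 4  # toy hidden size, chosen only for readability
router_weights = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
experts = [make_expert(DIM, rng) for _ in range(NUM_EXPERTS)]
token = [0.5, -1.0, 0.25, 2.0]

output, chosen, gates = moe_layer(token, router_weights, experts)
print("experts used:", chosen)
print("gate weights:", gates)
```

Only two of the eight expert matrices are multiplied for the token, which is where the saving comes from. Using the figures in the quote, 12B active parameters out of 45B total means roughly 27 percent of the model does work on any given token.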

Like the Mistral 7B model that preceded it, Mixtral 8x7B is released under the open Apache 2.0 license, aiming to demonstrate the capabilities of even modestly sized open-source LLMs for generative AI projects. The model can be downloaded directly as a torrent, with the link posted by Mistral on its X (formerly Twitter) page. The company has argued that open-source generative AI aligns better with its principles, including transparency, customizability, and preventing misuse.

“Since the creation of Mistral AI in May, we have been pursuing a clear trajectory: that of creating a European champion with a global vocation in generative artificial intelligence, based on an open, responsible, and decentralized approach to technology,” Mistral AI CEO Arthur Mensch said in a statement.

The open-source rhetoric doesn’t mean Mistral AI is shying away from commercialization. The beta launch of its developer platform is a move to monetize its AI models through API access. So, while Mistral’s two models can be downloaded directly, Mistral’s new La plateforme provides a home for all of its current and upcoming LLMs. The platform will eventually offer three chat models, labeled Tiny, Small, and Medium, tied to Mistral 7B, Mixtral 8x7B, and an as-yet unrevealed Mistral Medium model, respectively.
